What is Thermodynamic Computing and how does it help AI development?!

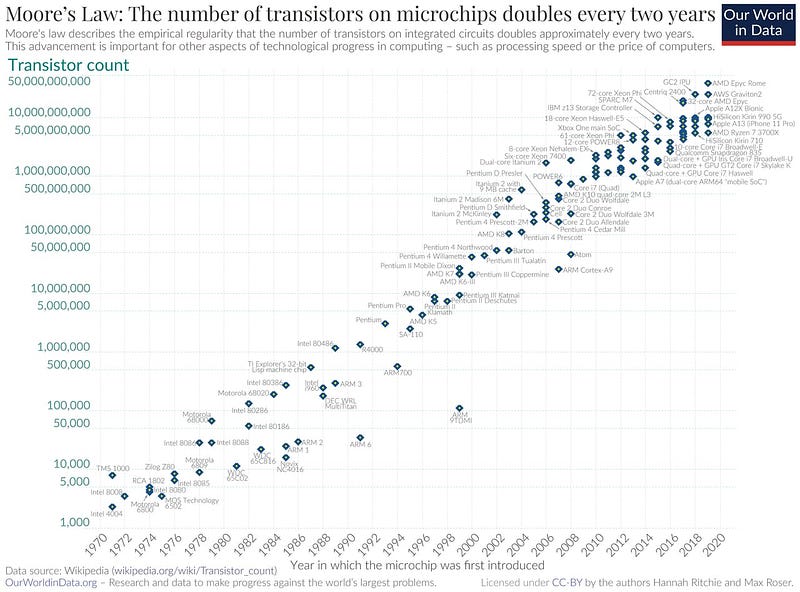

The foundation of modern computing is the transistor, a miniature electronic switch from which logic gates can be constructed, creating complex digital circuits like CPUs or GPUs. With the advancement of technology, transistors have become progressively smaller. According to Moore’s Law, the number of transistors in integrated circuits approximately doubles every 2 years. This exponential growth has enabled the exponential development of computing technology. However, there is a limit to how much the size of transistors can be reduced; we will soon reach a threshold below which transistors cannot function. Moreover, the advancement of AI has made the need for increased computational capacity more critical than ever before.

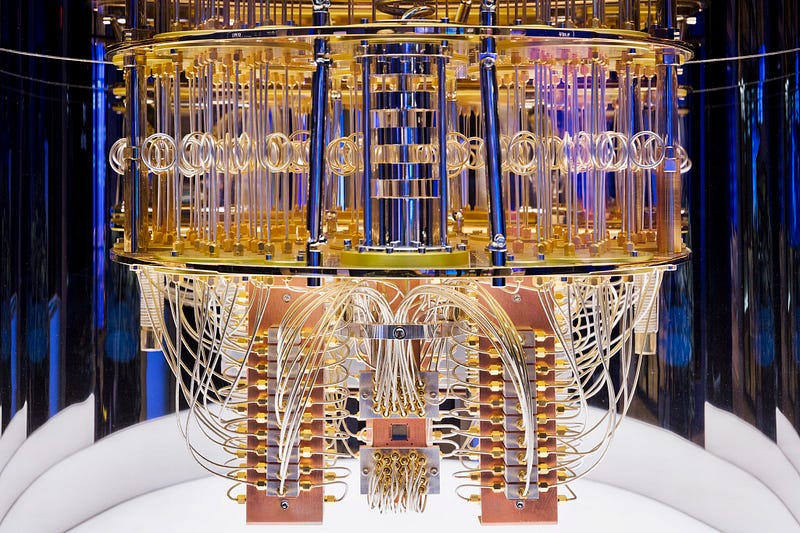

The fundamental issue is that nature is stochastic (unpredictable). And here, I’m not just referring to quantum mechanical effects. Environmental influences, thermal noise, and other disruptive factors must be considered when designing a circuit. For a transistor, the expectation is that it operates deterministically (predictably). If I run an algorithm 100 times in succession, I must get the same result every time. Currently, transistors are large enough that these factors do not interfere with their operation, but as their size is reduced, these issues will become increasingly relevant. So, what direction can technology take from here? The “usual” answer: quantum computers.

In fact, with quantum computers, we encounter the same issue: the need to eliminate environmental effects and thermal noise. This is why quantum computers must be cooled to temperatures near absolute zero. These extreme conditions preclude quantum processors from replacing today’s CPUs. But what could be the solution? It appears that to move forward, we must abandon our deterministic computers and embrace the stochastic nature of the world. This idea is not new. It’s several billion years old.

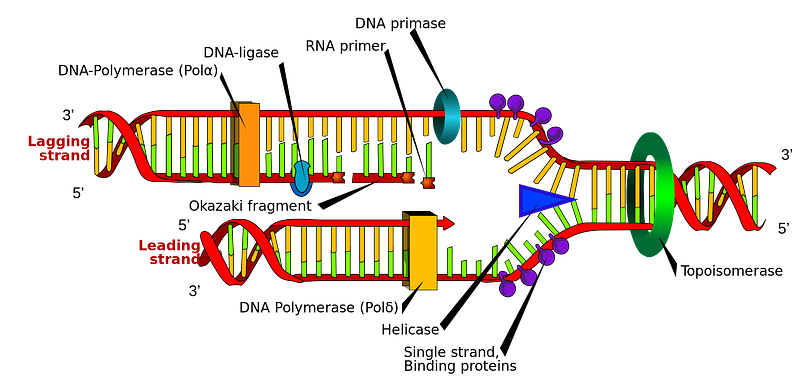

Educational videos often depict the functioning of cells as little factories, where everything operates with clockwork precision. Enzymes, like tiny robots, cut up DNA, to which amino acids attach, leading to the production of proteins. These proteins neatly interlock and, during cell division, separate from the old cell to form a new one. However, this is a highly simplified model. In reality, particles move entirely at random, and when the right components happen to come together, they bind. While human-made structures operate under strict rules, here processes form spontaneously under the compelling influence of physical and chemical laws. Of course, from a bird’s-eye view, the system might still appear to run like clockwork.

A very simple example is when we mix cold water with hot water. It would be impossible to track the random motion of each particle. Some particles move faster, while others move slower. Occasionally, particles collide and exchange energy. The system is entirely chaotic at the particle level, and simulating it would require immense computational capacity. Despite this, we can accurately predict that after a short period, the water will reach a uniform temperature. This is a simple self-organizing system: very complex at the particle level, yet entirely predictable thanks to the laws of physics and the rules of statistics. Similarly, cell division becomes predictable as a result of complex chemical processes and random motion. Of course, errors can occur. The DNA may not copy correctly, or mutations may develop. That’s why the system is highly redundant. Several processes will destroy the cell in case of an error (apoptosis), thus preventing faulty units from causing problems (or only very rarely, which is how diseases like cancer can develop).
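The hot-and-cold-water example can be sketched in a few lines of Python. This is only a toy model under my own assumptions: the function name `mix_water`, the energy units, and the rule that two colliding particles split their combined energy at random are all invented for illustration, not real molecular dynamics.

```python
import random
import statistics

def mix_water(n_particles=1000, steps=50_000, seed=0):
    """Toy model: particles exchange energy in random pairwise collisions."""
    rng = random.Random(seed)
    half = n_particles // 2
    # The "cold" half starts at energy 1.0, the "hot" half at 3.0 (arbitrary units).
    energy = [1.0] * half + [3.0] * (n_particles - half)
    for _ in range(steps):
        # Pick two distinct particles at random and let them collide.
        i, j = rng.sample(range(n_particles), 2)
        total = energy[i] + energy[j]
        share = rng.random()  # random split of the pair's combined energy
        energy[i], energy[j] = share * total, (1 - share) * total
    # Average energy of the formerly cold and formerly hot halves.
    return statistics.mean(energy[:half]), statistics.mean(energy[half:])

cold_side, hot_side = mix_water()
print(f"formerly cold half: {cold_side:.2f}, formerly hot half: {hot_side:.2f}")
```

No single collision is predictable, yet both halves should end up close to the overall average of 2.0, which is the "uniform temperature" of the example.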

The energy consumption of a transistor can be comparable to the energy consumption of a cell, even though a cell is orders of magnitude more complex. Imagine the complex calculations we could perform with such low consumption if we carried them out in an analog manner, exploiting the laws of nature.

In biology, thermal noise is not a problem but a necessity. Below certain temperatures, biological systems are incapable of functioning. It is the random motion induced by heat that powers them.

The foundation of thermodynamic computing is similar. Instead of trying to eliminate the stochastic nature of physical processes, we utilize it. But what can be done with a computer whose operation is non-deterministic?

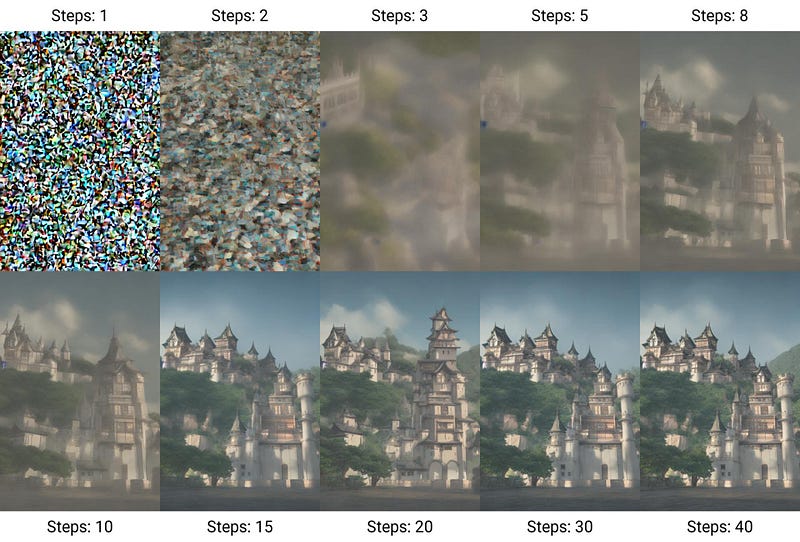

In fact, machine learning has many random components. In a neural network, for example, the weights are initialized randomly. The dropout layer, which reduces overfitting, randomly zeroes out activations during training. At a higher level, diffusion models also rely on random noise. Midjourney, for example, was trained to generate images from random noise, taking into account the given instructions.
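To make these two sources of randomness concrete, here is a minimal sketch using only Python's standard library. The helper names `init_weights` and `dropout`, the Gaussian scale of 0.1, and the "inverted dropout" rescaling are my choices for illustration; real frameworks offer several initialization schemes and their own dropout layers.

```python
import random

rng = random.Random(42)

def init_weights(n_in, n_out, scale=0.1):
    """Draw each weight from a zero-mean Gaussian (one common choice)."""
    return [[rng.gauss(0.0, scale) for _ in range(n_in)] for _ in range(n_out)]

def dropout(activations, p=0.5):
    """Inverted dropout: zero each value with probability p, rescale the rest."""
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

weights = init_weights(n_in=4, n_out=3)   # 3 rows of 4 random weights
hidden = [1.0] * 10_000                   # dummy activations
dropped = dropout(hidden, p=0.5)          # about half of them become 0.0

zero_fraction = dropped.count(0.0) / len(dropped)
print(f"fraction zeroed by dropout: {zero_fraction:.2f}")
```

Dividing the surviving values by the keep probability keeps the average activation roughly unchanged, so nothing needs to be rescaled at inference time.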

When a diffusion model is trained, a bit of noise is added to an image at every step until the entire image becomes noise. The neural network is then trained to reverse this process, that is, to generate an image from noise based on the given text. If the system is trained with enough images and text, it will be capable of generating images from random noise based on text. This is how Midjourney operates.
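The noising half of this process is easy to sketch. The snippet below is an illustration under my own assumptions: a fixed noise level `beta` per step and a variance-preserving update, as used in many published diffusion models. It is not Midjourney's actual implementation, and the learned reverse (denoising) network is not shown.

```python
import math
import random

def forward_diffusion(pixels, steps=200, beta=0.05, seed=0):
    """Repeatedly shrink the signal a little and add a little Gaussian noise."""
    rng = random.Random(seed)
    x = list(pixels)
    for _ in range(steps):
        x = [math.sqrt(1 - beta) * v + math.sqrt(beta) * rng.gauss(0.0, 1.0)
             for v in x]
    return x

image = [1.0] * 5000                 # a flat, all-white "image"
noised = forward_diffusion(image)

mean = sum(noised) / len(noised)
variance = sum((v - mean) ** 2 for v in noised) / len(noised)
print(f"mean {mean:.3f}, variance {variance:.3f}")
```

After enough steps the original image is practically gone: the values should look like plain Gaussian noise with a mean near 0 and a variance near 1.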

In current systems, we eliminate the random thermal noise to obtain deterministic transistors, and then on these deterministic transistors, we simulate randomness, which is necessary for the operation of neural networks. Instead of simulation, why not leverage nature’s randomness? The idea is similar to that of any analog computer. Instead of digitally simulating a given process, we should utilize the opportunities provided by nature and run it in an analog manner.
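The "simulated randomness" mentioned above is worth a quick look, because it is not random at all. Below is a minimal sketch of a linear congruential generator, one of the simplest pseudo-random generators. The class name `LCG` is mine, and real libraries use stronger algorithms (Python's own `random` module uses the Mersenne Twister), but the principle is the same: deterministic arithmetic that merely looks random.

```python
class LCG:
    """Linear congruential generator: plain integer arithmetic, no physics."""

    def __init__(self, seed):
        self.state = seed % 2**32

    def random(self):
        # Multiplier and increment from the well-known Numerical Recipes set.
        self.state = (1664525 * self.state + 1013904223) % 2**32
        return self.state / 2**32  # scale to the range [0, 1)

run_a = LCG(seed=123)
run_b = LCG(seed=123)
seq_a = [run_a.random() for _ in range(5)]
seq_b = [run_b.random() for _ in range(5)]
print(seq_a == seq_b)  # True: same seed, same "random" numbers
```

Every "random" number costs a multiplication, an addition, and a modulo on deterministic hardware, whereas thermal noise is physically there for free.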

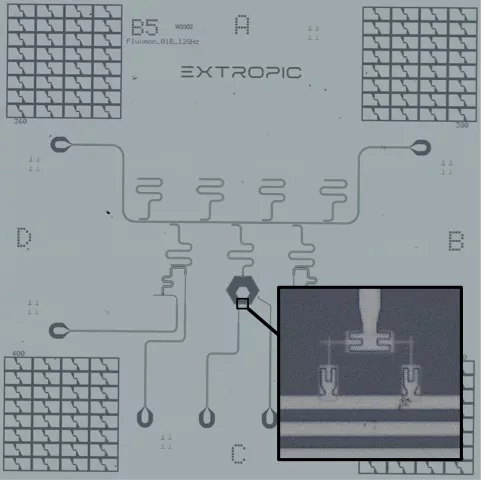

The startup Extropic is working on the development of such a chip. Like Google, the company was founded by two guys: Guillaume Verdon and Trevor McCourt. Both worked in the field of quantum computing before founding the company, and their chip lies somewhere halfway between traditional integrated circuits and quantum computers.

Extropic’s circuit works in an analog manner. The starting state is completely random, normally distributed thermal noise. Programming the circuit shapes this noise within each component. Analog weights take the place of transistors; they are noisy, but the result can be recovered through statistical analysis of the output. The founders call this probabilistic computing.
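I don't know the internals of Extropic's hardware, so the following is only a toy illustration of the general idea that a noisy component can still give a reliable answer once you look at its output statistically. The function `noisy_dot`, the noise level, and the sample count are all assumptions of mine.

```python
import random
import statistics

def noisy_dot(weights, inputs, noise_std, rng):
    """One read-out of a weighted sum whose weights jitter with Gaussian noise."""
    return sum((w + rng.gauss(0.0, noise_std)) * x
               for w, x in zip(weights, inputs))

rng = random.Random(1)
weights = [0.5, -1.0, 2.0]
inputs = [1.0, 2.0, 3.0]

# The noise-free answer, for comparison only.
exact = sum(w * x for w, x in zip(weights, inputs))

# Read the noisy "circuit" many times and analyze the samples.
samples = [noisy_dot(weights, inputs, 0.3, rng) for _ in range(20_000)]
estimate = statistics.mean(samples)
spread = statistics.stdev(samples)
print(f"exact {exact:.2f}, estimate {estimate:.2f}, spread {spread:.2f}")
```

A single read-out can be off by more than 1, but the average over many read-outs should land very close to the exact value, and the spread itself is useful information about the uncertainty.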

These analog circuits are much faster and consume much less energy, and since the thermal noise is not only non-disruptive but an essential component of the operation, they do not require the special conditions needed by quantum computers. The chips can be manufactured with existing production technology, so they could enter the commercial market within a few years.

As we have seen from the above, Extropic’s technology is very promising. However, what personally piqued my interest is that it is more biologically plausible. Of course, I don’t think that the neurons in artificial neural networks have anything to do with human brain neurons. These are two very different systems. However, the human brain does not learn through gradient descent. Biological learning is something entirely different, and randomness certainly plays a significant role in it.

As I mentioned, in biology and nature, everything operates randomly. What we see as deterministic at a high level is just what statistically stands out from many random events. This is how, for example, many living beings (including us humans) came to be through completely random evolution yet are built with almost engineering precision. I suspect that the human brain operates in a similar way to evolution: a multitude of random events within a suitably directed system, which we perceive from the outside as consistent thinking. This is why genetic algorithms were so intriguing to me, and now I see the same principle in Extropic’s chip.
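The principle of "random events within a directed system" can be shown with about the simplest evolutionary algorithm there is. This is a sketch with made-up parameters (a 40-bit genome whose fitness is just its number of 1 bits, and a function name `evolve` of my own), not a model of biology or of any particular chip.

```python
import random

def evolve(length=40, generations=5000, seed=0):
    """Random bit-flip mutations plus selection: keep the child if it is no worse."""
    rng = random.Random(seed)
    genome = [rng.randint(0, 1) for _ in range(length)]
    start_fitness = fitness = sum(genome)   # fitness = number of 1 bits
    for _ in range(generations):
        # Each bit flips independently with probability 1/length.
        child = [bit ^ 1 if rng.random() < 1 / length else bit for bit in genome]
        child_fitness = sum(child)
        if child_fitness >= fitness:        # selection is the only "direction"
            genome, fitness = child, child_fitness
    return start_fitness, fitness

start, final = evolve()
print(f"fitness went from {start} to {final} out of 40")
```

Every mutation is blind, yet because the selection step never accepts a worse child, the fitness only ever goes up, and the genome should drift toward the all-ones optimum.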

If you are interested, check the company homepage or this interview with the founders.